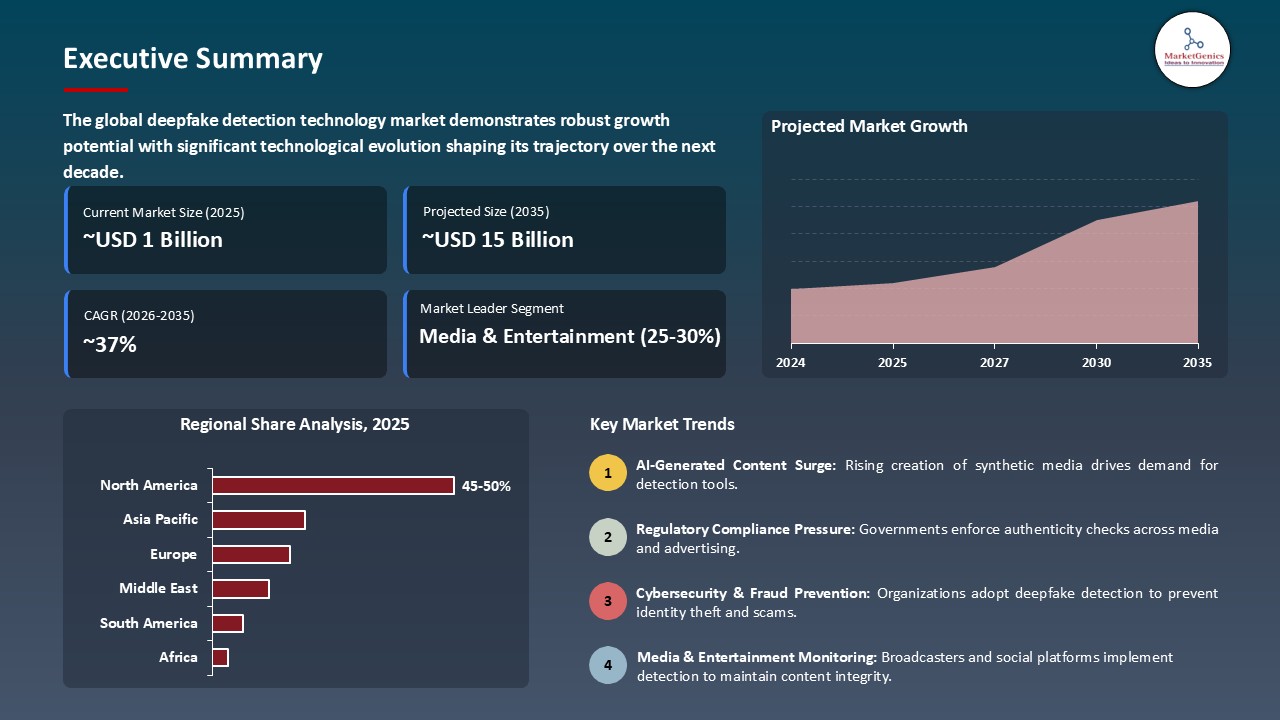

- The global deepfake detection technology market is valued at USD 0.6 billion in 2025.

- The market is projected to grow at a CAGR of 37.2% during the forecast period of 2026 to 2035.
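
The two figures above can be tied together with the standard compound-growth formula, value = base × (1 + CAGR)^years. The following is a minimal sketch, not the report's own forecasting model: the function name `project_market_size` is hypothetical, and it assumes the 37.2% rate compounds for ten annual periods from the 2025 base of USD 0.6 Bn. Under those assumptions the projection comes to about USD 14.2 Bn by 2035, the same order of magnitude as the opportunity figure quoted elsewhere in the report, which may rest on a different base or rounding.

```python
def project_market_size(base_value_bn: float, cagr: float, years: int) -> float:
    """Compound a base-year value forward by `years` at a constant annual rate."""
    return base_value_bn * (1.0 + cagr) ** years


# Figures quoted in the bullets above. Treating 2025 as the base year and
# compounding for ten periods (2026-2035) is an assumption of this sketch.
BASE_2025_BN = 0.6
CAGR = 0.372

# Year-by-year projection, rounded to two decimals for display.
projection = {
    year: round(project_market_size(BASE_2025_BN, CAGR, year - 2025), 2)
    for year in range(2025, 2036)
}

for year, value in projection.items():
    print(f"{year}: USD {value:.2f} Bn")
```

Changing `CAGR` or the number of periods shows how sensitive the 2035 value is to the growth assumption, which is worth keeping in mind when comparing forecasts from different sources.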

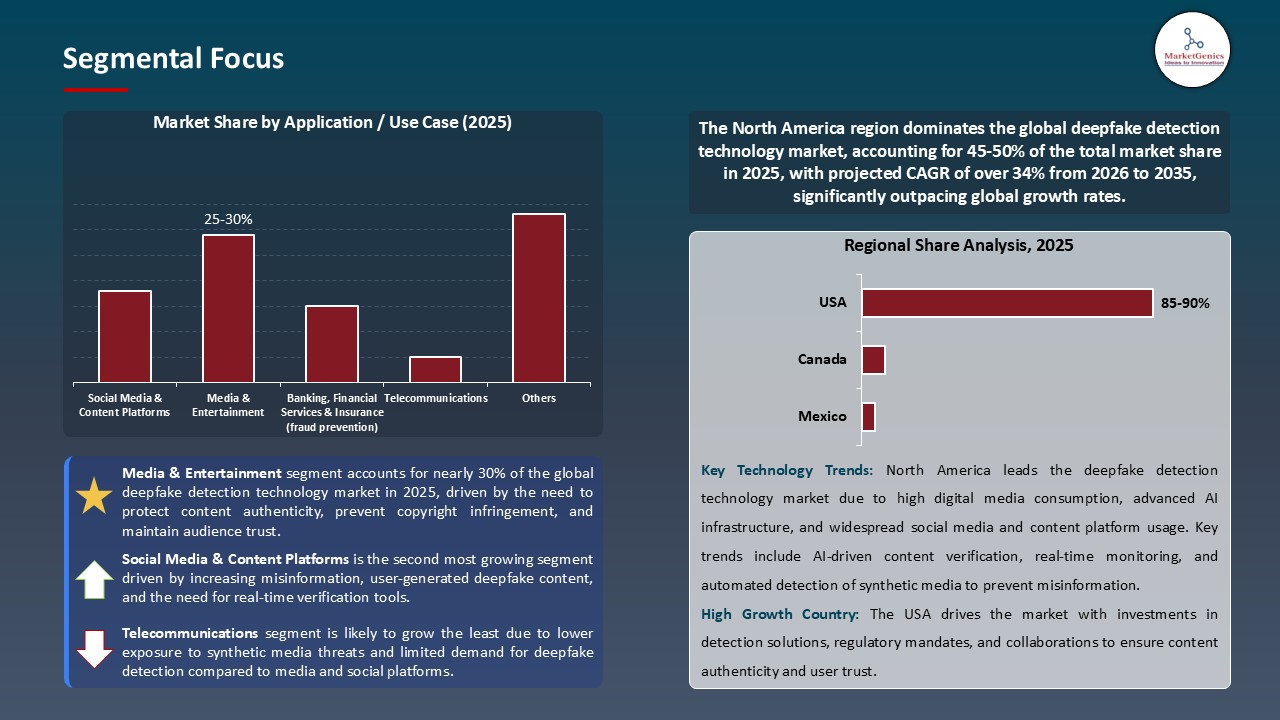

- The media & entertainment segment accounts for ~29% of the global deepfake detection technology market in 2025, driven by growing dangers of synthetic content and the necessity for dependable authenticity verification on digital platforms.

- The market for deepfake detection is expanding as companies strive to combat misinformation, identity theft, and altered media.

- Progress in multimodal forensics, GAN anomaly identification, and real-time video validation technologies is improving the identification of synthetic content across industries.

- The global deepfake detection technology market is highly consolidated, with the top five players accounting for nearly 55% of the market share in 2025.

- In April 2025, X-PHY Inc. introduced its Deepfake Detector, a fast, on-device detection tool that works without a network connection and therefore preserves user privacy.

- In July 2025, Cyabra Strategy Ltd. unveiled an AI-powered deepfake detection module that integrates into its disinformation platform.

- The global deepfake detection technology market is likely to create a total forecast opportunity of USD 14.5 Bn through 2035.

- North America is the most attractive region, supported by substantial investments in AI forensics, media authentication, and synthetic-media risk mitigation.

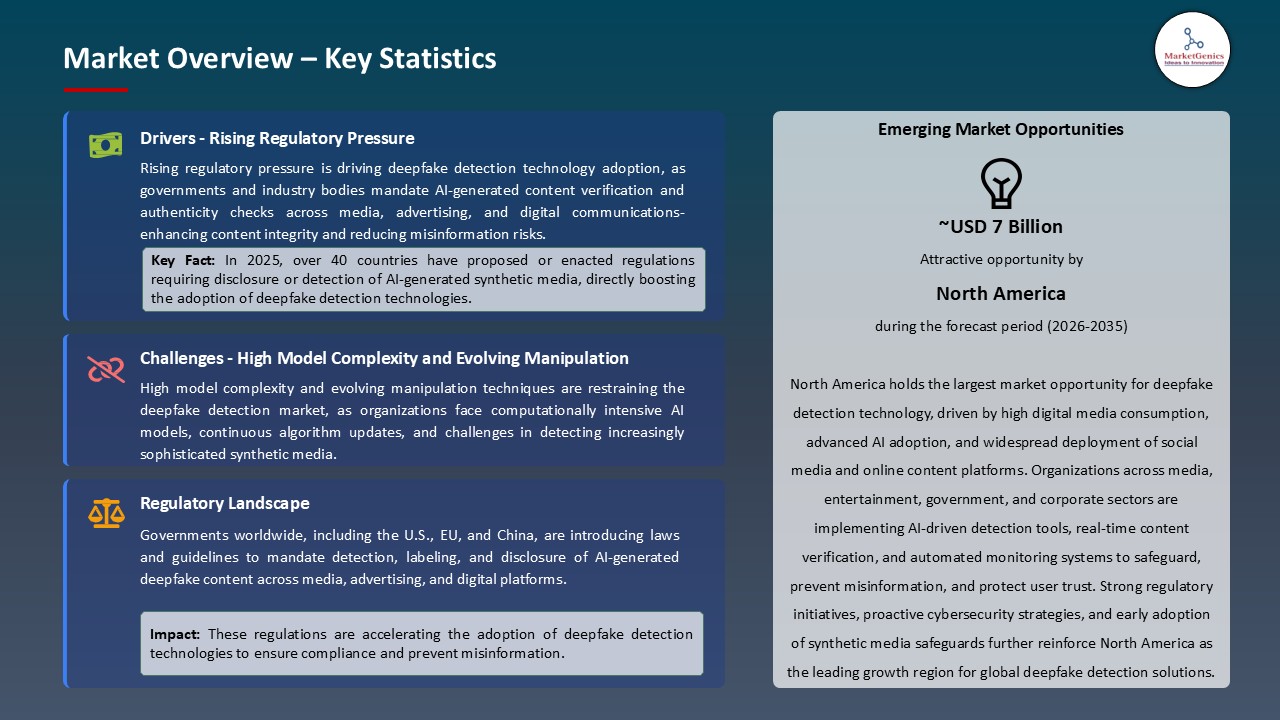

- Governments and regulators worldwide are introducing stricter rules against the misuse of synthetic media, which is accelerating the deployment of deepfake detection tools across platforms. Measures such as the EU AI Act and the FCC's 2024 decision to prohibit AI-generated political robocalls are among the reasons companies need to upgrade their media authentication systems. These factors are likely to boost the growth of the global deepfake detection technology market.

- In March 2025, Meta enhanced its AI-powered Content Provenance Initiative (CPI) to recognize audio-video manipulations in content shared on Facebook and Instagram, supporting industry-wide adherence to authenticity standards.

- Further, the risk of election manipulation, financial fraud, and identity theft has led both public-sector and private organizations to allocate more resources to automated media integrity pipelines that help them meet safety, transparency, and accountability requirements.

- Generative models are changing rapidly, which creates friction in adoption: detection models require constant retraining and heavy computational power. Most organizations lack the infrastructure needed to run large-scale forensic models in real time. This is therefore anticipated to hamper the growth of the global deepfake detection technology market.

- Cross-platform standardization also remains limited, and integrating detection workflows into legacy content moderation systems adds operational complexity.

- The complexity of multimodal deepfakes, such as text-to-video and voice clones, results in a high rate of false positives and false negatives, which slows adoption in high-stakes settings such as newsrooms and legal investigations.

- To secure communication channels, enterprises are using deepfake detection to safeguard the identity of their leadership and protect brand reputation. Banks and fintechs are incorporating voice-clone detection into verification calls to reduce social-engineering fraud. This is likely to create a lucrative opportunity for the deepfake detection technology market across the globe.

- Government-supported provenance initiatives, such as the Coalition for Content Provenance and Authenticity (C2PA), are opening new avenues for detection vendors to implement cryptographic watermarking, origin metadata, and tamper-proof audit trails.

- The Asia-Pacific and Middle East regions, identified as the next high-growth markets for media authentication, are investing heavily in national-level media authentication systems to counter disinformation, creating new opportunities for solution providers with scalable, cloud-based forensic AI expertise.

- AI/ML-powered detection models that use multimodal analysis (video, audio, facial micro-expressions, and linguistic cues) are being heavily relied upon to identify the most intricate synthetic media. Content moderation pipelines at large platforms now incorporate real-time risk scoring and anomaly detection.

- The rapid adoption of watermarking, digital signatures, and provenance frameworks is changing how media is verified. In January 2025, Google extended its SynthID watermarking system to include video and audio, facilitating traceability across generative models and speeding up the industry's move to authenticated content ecosystems.

- The convergence of cryptographic methods, automated forensics, and trust framework standards is driving rapid worldwide adoption of deepfake detection technologies as a means of preserving digital trust.

- Media and publishing organizations have been quick to adopt deepfake detection tools in response to the escalating wave of AI-generated fabrications and manipulations on their platforms. Broadcasters and newsrooms have instituted automated verification pipelines to confirm the authenticity of user-generated visuals before sharing them with the public. One example is the BBC, which in 2024 extended its Project Origin architecture to enhance content provenance and newsroom integrity.

- Improvements in AI/ML forensics, which can pinpoint facial micro-expressions, anomalies in audio waveforms, and pixel-level manipulation even in real time, are helping to identify forgeries with high precision. In addition, companies such as Adobe, Meta, and Microsoft are adopting C2PA-supported authenticity metadata to ease large-volume content verification for publishers.

- Regulations such as the EU AI Act and forthcoming U.S. rules that require synthetic media disclosure are principal drivers of deepfake detection adoption, making detection necessary for compliance and brand safety. Media outlets and fact-checkers can now access detection tools as scalable APIs and SDKs from Truepic, DeepMedia, and Sentinel AI, supporting the integration of deepfake examination into their editorial and distribution workflows.

- North America leads the deepfake detection technology market, supported by substantial investments in AI forensics, media authentication, and synthetic-media risk mitigation. In February 2024, the U.S. Federal Communications Commission (FCC) made a landmark decision to curb AI-generated political robocalls that use a cloned voice of a politician. This prompted demand for audio deepfake detection tools in telecom and public-sector systems across the country.

- The region has also maintained its lead through cross-industry collaboration: companies such as Microsoft, Google, Adobe, and Meta cooperate with the Coalition for Content Provenance and Authenticity (C2PA) and use standardized provenance metadata to trace manipulated images, videos, and audio at scale.

- In Canada, the Canadian Centre for Cyber Security (CCCS), along with academic partners, is leading research into the forensics of synthetic media to support national defense against disinformation and to assist news organizations and law enforcement agencies.

- In April 2025, X-PHY Inc. introduced its Deepfake Detector, a fast, on-device detection tool that works without a network connection and therefore preserves user privacy. The tool uses multimodal AI to analyze video, image, and audio streams and can identify manipulated media with an accuracy of up to 90%.

- In July 2025, Cyabra Strategy Ltd. unveiled a deepfake detection module that integrates into its disinformation platform. The module is powered by two AI models: PixelProof, which targets spatiotemporal inconsistencies in images, and MotionProof, which detects unnatural motion in video. The tool generates instant confidence scores and visual heatmaps, enabling governments and brands to authenticate content and stay ahead of synthetic media threats.

- United States

- Canada

- Mexico

- Germany

- United Kingdom

- France

- Italy

- Spain

- Netherlands

- Nordic Countries

- Poland

- Russia & CIS

- China

- India

- Japan

- South Korea

- Australia and New Zealand

- Indonesia

- Malaysia

- Thailand

- Vietnam

- Turkey

- UAE

- Saudi Arabia

- Israel

- South Africa

- Egypt

- Nigeria

- Algeria

- Brazil

- Argentina

- Adobe

- Amber Video

- Clarifai

- Serelay

- Deepware Scanner

- Giant Oak

- SRI International

- HIVE

- InVID / WeVerify

- Starling Labs

- Meta

- Microsoft

- Pindrop

- Respeecher

- Truepic

- Reality Defender

- Sensity (formerly Deeptrace)

- Two Hat

- ZeroFOX

- Other Key Players

- Solutions

  - Deepfake Detection Software

    - Video Deepfake Detection Software

    - Image Deepfake Detection Software

    - Audio/Voice Deepfake Detection Software

    - Text/Synthetic Text Detection Software

    - Multi-modal Detection Software

    - Others

  - Authentication & Verification Platforms

    - Digital Provenance Tracking Platforms

    - Media Authenticity Verification Platforms

    - Tamper Detection & Integrity Monitoring Platforms

    - Others

  - AI/ML Detection Engines

    - Model-based Detection Engines

    - Forensic Feature-based Engines

    - Hybrid/Ensemble Detection Engines

    - Others

  - Blockchain & Watermarking Tools

    - Cryptographic Watermarking Tools

    - Digital Fingerprinting Tools

    - Content Certification Tools

    - Others

- Services

  - Professional Services

    - Consulting & Assessment Services

    - Digital Forensics & Investigation Services

    - Integration & Implementation Services

    - Custom AI Model Development

    - Others

  - Training & Support

    - User Training & Certification

    - Analyst Training (for verification teams)

    - Technical Support & Maintenance

    - Others

  - Managed Services

    - Managed Detection & Monitoring Services

    - Continuous Verification-as-a-Service

    - Outsourced Forensics Services

    - Others

- Platforms & APIs

  - API-Based Deepfake Detection

    - Video Detection APIs

    - Image Detection APIs

    - Voice/Speech Detection APIs

    - Text/Synthetic Content Detection APIs

    - Others

  - SDKs & Developer Toolkits

    - Mobile SDKs

    - Web SDKs

    - Enterprise Integration SDKs

    - Others

  - Cloud Platforms

    - Cloud-native Detection Platforms

    - Model Hosting & Model-Inference Platforms

    - Scalable Compute Platforms for Heavy Detection

    - Others

- Cloud-Based

- On-Premises

- Hybrid

- Video deepfake detection

- Image (photo) deepfake detection

- Audio / voice deepfake detection

- Text / synthetic text detection

- Multi-modal detection (combined audio-video-text)

- Others

- AI/ ML-based (CNNs, RNNs, Transformers)

  - CNNs

  - RNNs

  - Transformers

  - Others

- Forensic Feature Analysis

- Blockchain/ Provenance-based Verification

- Watermarking & Fingerprinting

- Hybrid/ Ensemble Methods

- Others

- Real-time / Live-stream detection

- Post-event / forensic analysis

- Content authentication / provenance

- Source verification / origin tracing

- Deepfake prevention / tampering alerts

- Others

- Large enterprises

- Small & Medium-sized Enterprises (SMEs)

- Individual users / consumers (verification apps)

- Media & Entertainment

- Social Media & Content Platforms

- Banking, Financial Services & Insurance (fraud prevention)

- Government & Public Sector (elections, national security)

- Law Enforcement & Legal / eDiscovery

- Healthcare & Telemedicine

- Advertising & Brand Protection

- Education & Research

- Enterprise Communications (internal security)

- Telecommunications

- Others

- BFSI

- Government & Defense

- Media & Entertainment

- Technology & IT Services

- Healthcare & Life Sciences

- Education

- Retail & E-commerce

- Legal & Compliance

- Telecom & OTT Services

- Advertising & Marketing

- Others

- 1. Research Methodology and Assumptions

- 1.1. Definitions

- 1.2. Research Design and Approach

- 1.3. Data Collection Methods

- 1.4. Base Estimates and Calculations

- 1.5. Forecasting Models

- 1.5.1. Key Forecast Factors & Impact Analysis

- 1.6. Secondary Research

- 1.6.1. Open Sources

- 1.6.2. Paid Databases

- 1.6.3. Associations

- 1.7. Primary Research

- 1.7.1. Primary Sources

- 1.7.2. Primary Interviews with Stakeholders across Ecosystem

- 2. Executive Summary

- 2.1. Global Deepfake Detection Technology Market Outlook

- 2.1.1. Deepfake Detection Technology Market Size (Value - US$ Bn), and Forecasts, 2021-2035

- 2.1.2. Compounded Annual Growth Rate Analysis

- 2.1.3. Growth Opportunity Analysis

- 2.1.4. Segmental Share Analysis

- 2.1.5. Geographical Share Analysis

- 2.2. Market Analysis and Facts

- 2.3. Supply-Demand Analysis

- 2.4. Competitive Benchmarking

- 2.5. Go-to-Market Strategy

- 2.5.1. Customer/ End-use Industry Assessment

- 2.5.2. Growth Opportunity Data, 2026-2035

- 2.5.2.1. Regional Data

- 2.5.2.2. Country Data

- 2.5.2.3. Segmental Data

- 2.5.3. Identification of Potential Market Spaces

- 2.5.4. GAP Analysis

- 2.5.5. Potential Attractive Price Points

- 2.5.6. Prevailing Market Risks & Challenges

- 2.5.7. Preferred Sales & Marketing Strategies

- 2.5.8. Key Recommendations and Analysis

- 2.5.9. A Way Forward

- 3. Industry Data and Premium Insights

- 3.1. Global Information Technology & Media Ecosystem Overview, 2025

- 3.1.1. Information Technology & Media Industry Analysis

- 3.1.2. Key Trends for Information Technology & Media Industry

- 3.1.3. Regional Distribution for Information Technology & Media Industry

- 3.2. Supplier Customer Data

- 3.3. Technology Roadmap and Developments

- 4. Market Overview

- 4.1. Market Dynamics

- 4.1.1. Drivers

- 4.1.1.1. Rising demand for content authenticity verification across media, entertainment, and social platforms

- 4.1.1.2. Growing adoption of AI-driven real-time monitoring and detection tools by enterprises and governments

- 4.1.1.3. Increasing regulatory mandates for AI-generated content disclosure and misinformation prevention

- 4.1.2. Restraints

- 4.1.2.1. High model complexity and computational costs of deepfake detection algorithms

- 4.1.2.2. Rapidly evolving synthetic media and manipulation techniques making detection challenging

- 4.2. Key Trend Analysis

- 4.3. Regulatory Framework

- 4.3.1. Key Regulations, Norms, and Subsidies, by Key Countries

- 4.3.2. Tariffs and Standards

- 4.3.3. Impact Analysis of Regulations on the Market

- 4.4. Value Chain Analysis

- 4.4.1. Data/ Algorithms Suppliers

- 4.4.2. System Integrators/ Technology Providers

- 4.4.3. Deepfake Detection Technology Providers

- 4.4.4. End Users

- 4.5. Cost Structure Analysis

- 4.5.1. Parameter’s Share for Cost Associated

- 4.5.2. COGP vs COGS

- 4.5.3. Profit Margin Analysis

- 4.6. Pricing Analysis

- 4.6.1. Regional Pricing Analysis

- 4.6.2. Segmental Pricing Trends

- 4.6.3. Factors Influencing Pricing

- 4.7. Porter’s Five Forces Analysis

- 4.8. PESTEL Analysis

- 4.9. Global Deepfake Detection Technology Market Demand

- 4.9.1. Historical Market Size – Value (US$ Bn), 2020-2024

- 4.9.2. Current and Future Market Size – Value (US$ Bn), 2026–2035

- 4.9.2.1. Y-o-Y Growth Trends

- 4.9.2.2. Absolute $ Opportunity Assessment

- 5. Competition Landscape

- 5.1. Competition structure

- 5.1.1. Fragmented v/s consolidated

- 5.2. Company Share Analysis, 2025

- 5.2.1. Global Company Market Share

- 5.2.2. By Region

- 5.2.2.1. North America

- 5.2.2.2. Europe

- 5.2.2.3. Asia Pacific

- 5.2.2.4. Middle East

- 5.2.2.5. Africa

- 5.2.2.6. South America

- 5.3. Product Comparison Matrix

- 5.3.1. Specifications

- 5.3.2. Market Positioning

- 5.3.3. Pricing

- 6. Global Deepfake Detection Technology Market Analysis, by Component

- 6.1. Key Segment Analysis

- 6.2. Deepfake Detection Technology Market Size (Value - US$ Bn), Analysis, and Forecasts, by Component, 2021-2035

- 6.2.1. Solutions

- 6.2.1.1. Deepfake Detection Software

- 6.2.1.1.1. Video Deepfake Detection Software

- 6.2.1.1.2. Image Deepfake Detection Software

- 6.2.1.1.3. Audio/Voice Deepfake Detection Software

- 6.2.1.1.4. Text/Synthetic Text Detection Software

- 6.2.1.1.5. Multi-modal Detection Software

- 6.2.1.1.6. Others

- 6.2.1.2. Authentication & Verification Platforms

- 6.2.1.2.1. Digital Provenance Tracking Platforms

- 6.2.1.2.2. Media Authenticity Verification Platforms

- 6.2.1.2.3. Tamper Detection & Integrity Monitoring Platforms

- 6.2.1.2.4. Others

- 6.2.1.3. AI/ML Detection Engines

- 6.2.1.3.1. Model-based Detection Engines

- 6.2.1.3.2. Forensic Feature-based Engines

- 6.2.1.3.3. Hybrid/Ensemble Detection Engines

- 6.2.1.3.4. Others

- 6.2.1.4. Blockchain & Watermarking Tools

- 6.2.1.4.1. Cryptographic Watermarking Tools

- 6.2.1.4.2. Digital Fingerprinting Tools

- 6.2.1.4.3. Content Certification Tools

- 6.2.1.4.4. Others

- 6.2.2. Services

- 6.2.2.1. Professional Services

- 6.2.2.1.1. Consulting & Assessment Services

- 6.2.2.1.2. Digital Forensics & Investigation Services

- 6.2.2.1.3. Integration & Implementation Services

- 6.2.2.1.4. Custom AI Model Development

- 6.2.2.1.5. Others

- 6.2.2.2. Training & Support

- 6.2.2.2.1. User Training & Certification

- 6.2.2.2.2. Analyst Training (for verification teams)

- 6.2.2.2.3. Technical Support & Maintenance

- 6.2.2.2.4. Others

- 6.2.2.3. Managed Services

- 6.2.2.3.1. Managed Detection & Monitoring Services

- 6.2.2.3.2. Continuous Verification-as-a-Service

- 6.2.2.3.3. Outsourced Forensics Services

- 6.2.2.3.4. Others

- 6.2.3. Platforms & APIs

- 6.2.3.1. API-Based Deepfake Detection

- 6.2.3.1.1. Video Detection APIs

- 6.2.3.1.2. Image Detection APIs

- 6.2.3.1.3. Voice/Speech Detection APIs

- 6.2.3.1.4. Text/Synthetic Content Detection APIs

- 6.2.3.1.5. Others

- 6.2.3.2. SDKs & Developer Toolkits

- 6.2.3.2.1. Mobile SDKs

- 6.2.3.2.2. Web SDKs

- 6.2.3.2.3. Enterprise Integration SDKs

- 6.2.3.2.4. Others

- 6.2.3.3. Cloud Platforms

- 6.2.3.3.1. Cloud-native Detection Platforms

- 6.2.3.3.2. Model Hosting & Model-Inference Platforms

- 6.2.3.3.3. Scalable Compute Platforms for Heavy Detection

- 6.2.3.3.4. Others

- 7. Global Deepfake Detection Technology Market Analysis, by Deployment Mode

- 7.1. Key Segment Analysis

- 7.2. Deepfake Detection Technology Market Size (Value - US$ Bn), Analysis, and Forecasts, by Deployment Mode, 2021-2035

- 7.2.1. Cloud-Based

- 7.2.2. On-Premises

- 7.2.3. Hybrid

- 8. Global Deepfake Detection Technology Market Analysis, by Technology / Modality

- 8.1. Key Segment Analysis

- 8.2. Deepfake Detection Technology Market Size (Value - US$ Bn), Analysis, and Forecasts, by Technology / Modality, 2021-2035

- 8.2.1. Video deepfake detection

- 8.2.2. Image (photo) deepfake detection

- 8.2.3. Audio / voice deepfake detection

- 8.2.4. Text / synthetic text detection

- 8.2.5. Multi-modal detection (combined audio-video-text)

- 8.2.6. Others

- 9. Global Deepfake Detection Technology Market Analysis, by Detection Technique

- 9.1. Key Segment Analysis

- 9.2. Deepfake Detection Technology Market Size (Value - US$ Bn), Analysis, and Forecasts, by Detection Technique, 2021-2035

- 9.2.1. AI/ ML-based (CNNs, RNNs, Transformers)

- 9.2.1.1. CNNs

- 9.2.1.2. RNNs

- 9.2.1.3. Transformers

- 9.2.1.4. Others

- 9.2.2. Forensic Feature Analysis

- 9.2.3. Blockchain/ Provenance-based Verification

- 9.2.4. Watermarking & Fingerprinting

- 9.2.5. Hybrid/ Ensemble Methods

- 9.2.6. Others

- 10. Global Deepfake Detection Technology Market Analysis, by Functionality/ Use Case

- 10.1. Key Segment Analysis

- 10.2. Deepfake Detection Technology Market Size (Value - US$ Bn), Analysis, and Forecasts, by Functionality/ Use Case, 2021-2035

- 10.2.1. Real-time / Live-stream detection

- 10.2.2. Post-event / forensic analysis

- 10.2.3. Content authentication / provenance

- 10.2.4. Source verification / origin tracing

- 10.2.5. Deepfake prevention / tampering alerts

- 10.2.6. Others

- 11. Global Deepfake Detection Technology Market Analysis, by Organization Size

- 11.1. Key Segment Analysis

- 11.2. Deepfake Detection Technology Market Size (Value - US$ Bn), Analysis, and Forecasts, by Organization Size, 2021-2035

- 11.2.1. Large enterprises

- 11.2.2. Small & Medium-sized Enterprises (SMEs)

- 11.2.3. Individual users / consumers (verification apps)

- 12. Global Deepfake Detection Technology Market Analysis, by Application / Use Case

- 12.1. Key Segment Analysis

- 12.2. Deepfake Detection Technology Market Size (Value - US$ Bn), Analysis, and Forecasts, by Application / Use Case, 2021-2035

- 12.2.1. Media & Entertainment

- 12.2.2. Social Media & Content Platforms

- 12.2.3. Banking, Financial Services & Insurance (fraud prevention)

- 12.2.4. Government & Public Sector (elections, national security)

- 12.2.5. Law Enforcement & Legal / eDiscovery

- 12.2.6. Healthcare & Telemedicine

- 12.2.7. Advertising & Brand Protection

- 12.2.8. Education & Research

- 12.2.9. Enterprise Communications (internal security)

- 12.2.10. Telecommunications

- 12.2.11. Others

- 13. Global Deepfake Detection Technology Market Analysis, by Industry Vertical

- 13.1. Key Segment Analysis

- 13.2. Deepfake Detection Technology Market Size (Value - US$ Bn), Analysis, and Forecasts, by Industry Vertical, 2021-2035

- 13.2.1. BFSI

- 13.2.2. Government & Defense

- 13.2.3. Media & Entertainment

- 13.2.4. Technology & IT Services

- 13.2.5. Healthcare & Life Sciences

- 13.2.6. Education

- 13.2.7. Retail & E-commerce

- 13.2.8. Legal & Compliance

- 13.2.9. Telecom & OTT Services

- 13.2.10. Advertising & Marketing

- 13.2.11. Others

- 14. Global Deepfake Detection Technology Market Analysis and Forecasts, by Region

- 14.1. Key Findings

- 14.2. Deepfake Detection Technology Market Size (Value - US$ Bn), Analysis, and Forecasts, by Region, 2021-2035

- 14.2.1. North America

- 14.2.2. Europe

- 14.2.3. Asia Pacific

- 14.2.4. Middle East

- 14.2.5. Africa

- 14.2.6. South America

- 15. North America Deepfake Detection Technology Market Analysis

- 15.1. Key Segment Analysis

- 15.2. Regional Snapshot

- 15.3. North America Deepfake Detection Technology Market Size (Value - US$ Bn), Analysis, and Forecasts, 2021-2035

- 15.3.1. Component

- 15.3.2. Deployment Mode

- 15.3.3. Technology/ Modality

- 15.3.4. Detection Technique

- 15.3.5. Functionality/ Use Case

- 15.3.6. Organization Size

- 15.3.7. Application / Use Case

- 15.3.8. Industry Vertical

- 15.3.9. Country

- 15.3.9.1. USA

- 15.3.9.2. Canada

- 15.3.9.3. Mexico

- 15.4. USA Deepfake Detection Technology Market

- 15.4.1. Country Segmental Analysis

- 15.4.2. Component

- 15.4.3. Deployment Mode

- 15.4.4. Technology/ Modality

- 15.4.5. Detection Technique

- 15.4.6. Functionality/ Use Case

- 15.4.7. Organization Size

- 15.4.8. Application / Use Case

- 15.4.9. Industry Vertical

- 15.5. Canada Deepfake Detection Technology Market

- 15.5.1. Country Segmental Analysis

- 15.5.2. Component

- 15.5.3. Deployment Mode

- 15.5.4. Technology/ Modality

- 15.5.5. Detection Technique

- 15.5.6. Functionality/ Use Case

- 15.5.7. Organization Size

- 15.5.8. Application / Use Case

- 15.5.9. Industry Vertical

- 15.6. Mexico Deepfake Detection Technology Market

- 15.6.1. Country Segmental Analysis

- 15.6.2. Component

- 15.6.3. Deployment Mode

- 15.6.4. Technology/ Modality

- 15.6.5. Detection Technique

- 15.6.6. Functionality/ Use Case

- 15.6.7. Organization Size

- 15.6.8. Application / Use Case

- 15.6.9. Industry Vertical

- 16. Europe Deepfake Detection Technology Market Analysis

- 16.1. Key Segment Analysis

- 16.2. Regional Snapshot

- 16.3. Europe Deepfake Detection Technology Market Size (Value - US$ Bn), Analysis, and Forecasts, 2021-2035

- 16.3.1. Component

- 16.3.2. Deployment Mode

- 16.3.3. Technology/ Modality

- 16.3.4. Detection Technique

- 16.3.5. Functionality/ Use Case

- 16.3.6. Organization Size

- 16.3.7. Application / Use Case

- 16.3.8. Industry Vertical

- 16.3.9. Country

- 16.3.9.1. Germany

- 16.3.9.2. United Kingdom

- 16.3.9.3. France

- 16.3.9.4. Italy

- 16.3.9.5. Spain

- 16.3.9.6. Netherlands

- 16.3.9.7. Nordic Countries

- 16.3.9.8. Poland

- 16.3.9.9. Russia & CIS

- 16.3.9.10. Rest of Europe

- 16.4. Germany Deepfake Detection Technology Market

- 16.4.1. Country Segmental Analysis

- 16.4.2. Component

- 16.4.3. Deployment Mode

- 16.4.4. Technology/ Modality

- 16.4.5. Detection Technique

- 16.4.6. Functionality/ Use Case

- 16.4.7. Organization Size

- 16.4.8. Application / Use Case

- 16.4.9. Industry Vertical

- 16.5. United Kingdom Deepfake Detection Technology Market

- 16.5.1. Country Segmental Analysis

- 16.5.2. Component

- 16.5.3. Deployment Mode

- 16.5.4. Technology/ Modality

- 16.5.5. Detection Technique

- 16.5.6. Functionality/ Use Case

- 16.5.7. Organization Size

- 16.5.8. Application / Use Case

- 16.5.9. Industry Vertical

- 16.6. France Deepfake Detection Technology Market

- 16.6.1. Country Segmental Analysis

- 16.6.2. Component

- 16.6.3. Deployment Mode

- 16.6.4. Technology/ Modality

- 16.6.5. Detection Technique

- 16.6.6. Functionality/ Use Case

- 16.6.7. Organization Size

- 16.6.8. Application / Use Case

- 16.6.9. Industry Vertical

- 16.7. Italy Deepfake Detection Technology Market

- 16.7.1. Country Segmental Analysis

- 16.7.2. Component

- 16.7.3. Deployment Mode

- 16.7.4. Technology/ Modality

- 16.7.5. Detection Technique

- 16.7.6. Functionality/ Use Case

- 16.7.7. Organization Size

- 16.7.8. Application / Use Case

- 16.7.9. Industry Vertical

- 16.8. Spain Deepfake Detection Technology Market

- 16.8.1. Country Segmental Analysis

- 16.8.2. Component

- 16.8.3. Deployment Mode

- 16.8.4. Technology/ Modality

- 16.8.5. Detection Technique

- 16.8.6. Functionality/ Use Case

- 16.8.7. Organization Size

- 16.8.8. Application / Use Case

- 16.8.9. Industry Vertical

- 16.9. Netherlands Deepfake Detection Technology Market

- 16.9.1. Country Segmental Analysis

- 16.9.2. Component

- 16.9.3. Deployment Mode

- 16.9.4. Technology/ Modality

- 16.9.5. Detection Technique

- 16.9.6. Functionality/ Use Case

- 16.9.7. Organization Size

- 16.9.8. Application / Use Case

- 16.9.9. Industry Vertical

- 16.10. Nordic Countries Deepfake Detection Technology Market

- 16.10.1. Country Segmental Analysis

- 16.10.2. Component

- 16.10.3. Deployment Mode

- 16.10.4. Technology/ Modality

- 16.10.5. Detection Technique

- 16.10.6. Functionality/ Use Case

- 16.10.7. Organization Size

- 16.10.8. Application / Use Case

- 16.10.9. Industry Vertical

- 16.11. Poland Deepfake Detection Technology Market

- 16.11.1. Country Segmental Analysis

- 16.11.2. Component

- 16.11.3. Deployment Mode

- 16.11.4. Technology/ Modality

- 16.11.5. Detection Technique

- 16.11.6. Functionality/ Use Case

- 16.11.7. Organization Size

- 16.11.8. Application / Use Case

- 16.11.9. Industry Vertical

- 16.12. Russia & CIS Deepfake Detection Technology Market

- 16.12.1. Country Segmental Analysis

- 16.12.2. Component

- 16.12.3. Deployment Mode

- 16.12.4. Technology/ Modality

- 16.12.5. Detection Technique

- 16.12.6. Functionality/ Use Case

- 16.12.7. Organization Size

- 16.12.8. Application / Use Case

- 16.12.9. Industry Vertical

- 16.13. Rest of Europe Deepfake Detection Technology Market

- 16.13.1. Country Segmental Analysis

- 16.13.2. Component

- 16.13.3. Deployment Mode

- 16.13.4. Technology/ Modality

- 16.13.5. Detection Technique

- 16.13.6. Functionality/ Use Case

- 16.13.7. Organization Size

- 16.13.8. Application / Use Case

- 16.13.9. Industry Vertical

- 17. Asia Pacific Deepfake Detection Technology Market Analysis

- 17.1. Key Segment Analysis

- 17.2. Regional Snapshot

- 17.3. Asia Pacific Deepfake Detection Technology Market Size (Value - US$ Bn), Analysis, and Forecasts, 2021-2035

- 17.3.1. Component

- 17.3.2. Deployment Mode

- 17.3.3. Technology/ Modality

- 17.3.4. Detection Technique

- 17.3.5. Functionality/ Use Case

- 17.3.6. Organization Size

- 17.3.7. Application / Use Case

- 17.3.8. Industry Vertical

- 17.3.9. Country

- 17.3.9.1. China

- 17.3.9.2. India

- 17.3.9.3. Japan

- 17.3.9.4. South Korea

- 17.3.9.5. Australia and New Zealand

- 17.3.9.6. Indonesia

- 17.3.9.7. Malaysia

- 17.3.9.8. Thailand

- 17.3.9.9. Vietnam

- 17.3.9.10. Rest of Asia Pacific

- 17.4. China Deepfake Detection Technology Market

- 17.4.1. Country Segmental Analysis

- 17.4.2. Component

- 17.4.3. Deployment Mode

- 17.4.4. Technology/ Modality

- 17.4.5. Detection Technique

- 17.4.6. Functionality/ Use Case

- 17.4.7. Organization Size

- 17.4.8. Application / Use Case

- 17.4.9. Industry Vertical

- 17.5. India Deepfake Detection Technology Market

- 17.5.1. Country Segmental Analysis

- 17.5.2. Component

- 17.5.3. Deployment Mode

- 17.5.4. Technology/ Modality

- 17.5.5. Detection Technique

- 17.5.6. Functionality/ Use Case

- 17.5.7. Organization Size

- 17.5.8. Application / Use Case

- 17.5.9. Industry Vertical

- 17.6. Japan Deepfake Detection Technology Market

- 17.6.1. Country Segmental Analysis

- 17.6.2. Component

- 17.6.3. Deployment Mode

- 17.6.4. Technology/ Modality

- 17.6.5. Detection Technique

- 17.6.6. Functionality/ Use Case

- 17.6.7. Organization Size

- 17.6.8. Application / Use Case

- 17.6.9. Industry Vertical

- 17.7. South Korea Deepfake Detection Technology Market

- 17.7.1. Country Segmental Analysis

- 17.7.2. Component

- 17.7.3. Deployment Mode

- 17.7.4. Technology/ Modality

- 17.7.5. Detection Technique

- 17.7.6. Functionality/ Use Case

- 17.7.7. Organization Size

- 17.7.8. Application / Use Case

- 17.7.9. Industry Vertical

- 17.8. Australia and New Zealand Deepfake Detection Technology Market

- 17.8.1. Country Segmental Analysis

- 17.8.2. Component

- 17.8.3. Deployment Mode

- 17.8.4. Technology/ Modality

- 17.8.5. Detection Technique

- 17.8.6. Functionality/ Use Case

- 17.8.7. Organization Size

- 17.8.8. Application / Use Case

- 17.8.9. Industry Vertical

- 17.9. Indonesia Deepfake Detection Technology Market

- 17.9.1. Country Segmental Analysis

- 17.9.2. Component

- 17.9.3. Deployment Mode

- 17.9.4. Technology/ Modality

- 17.9.5. Detection Technique

- 17.9.6. Functionality/ Use Case

- 17.9.7. Organization Size

- 17.9.8. Application / Use Case

- 17.9.9. Industry Vertical

- 17.10. Malaysia Deepfake Detection Technology Market

- 17.10.1. Country Segmental Analysis

- 17.10.2. Component

- 17.10.3. Deployment Mode

- 17.10.4. Technology/ Modality

- 17.10.5. Detection Technique

- 17.10.6. Functionality/ Use Case

- 17.10.7. Organization Size

- 17.10.8. Application / Use Case

- 17.10.9. Industry Vertical

- 17.11. Thailand Deepfake Detection Technology Market

- 17.11.1. Country Segmental Analysis

- 17.11.2. Component

- 17.11.3. Deployment Mode

- 17.11.4. Technology/ Modality

- 17.11.5. Detection Technique

- 17.11.6. Functionality/ Use Case

- 17.11.7. Organization Size

- 17.11.8. Application / Use Case

- 17.11.9. Industry Vertical

- 17.12. Vietnam Deepfake Detection Technology Market

- 17.12.1. Country Segmental Analysis

- 17.12.2. Component

- 17.12.3. Deployment Mode

- 17.12.4. Technology/ Modality

- 17.12.5. Detection Technique

- 17.12.6. Functionality/ Use Case

- 17.12.7. Organization Size

- 17.12.8. Application / Use Case

- 17.12.9. Industry Vertical

- 17.13. Rest of Asia Pacific Deepfake Detection Technology Market

- 17.13.1. Country Segmental Analysis

- 17.13.2. Component

- 17.13.3. Deployment Mode

- 17.13.4. Technology/ Modality

- 17.13.5. Detection Technique

- 17.13.6. Functionality/ Use Case

- 17.13.7. Organization Size

- 17.13.8. Application / Use Case

- 17.13.9. Industry Vertical

- 18. Middle East Deepfake Detection Technology Market Analysis

- 18.1. Key Segment Analysis

- 18.2. Regional Snapshot

- 18.3. Middle East Deepfake Detection Technology Market Size (Value - US$ Bn), Analysis, and Forecasts, 2021-2035

- 18.3.1. Component

- 18.3.2. Deployment Mode

- 18.3.3. Technology/ Modality

- 18.3.4. Detection Technique

- 18.3.5. Functionality/ Use Case

- 18.3.6. Organization Size

- 18.3.7. Application / Use Case

- 18.3.8. Industry Vertical

- 18.3.9. Country

- 18.3.9.1. Turkey

- 18.3.9.2. UAE

- 18.3.9.3. Saudi Arabia

- 18.3.9.4. Israel

- 18.3.9.5. Rest of Middle East

- 18.4. Turkey Deepfake Detection Technology Market

- 18.4.1. Country Segmental Analysis

- 18.4.2. Component

- 18.4.3. Deployment Mode

- 18.4.4. Technology/ Modality

- 18.4.5. Detection Technique

- 18.4.6. Functionality/ Use Case

- 18.4.7. Organization Size

- 18.4.8. Application / Use Case

- 18.4.9. Industry Vertical

- 18.5. UAE Deepfake Detection Technology Market

- 18.5.1. Country Segmental Analysis

- 18.5.2. Component

- 18.5.3. Deployment Mode

- 18.5.4. Technology/ Modality

- 18.5.5. Detection Technique

- 18.5.6. Functionality/ Use Case

- 18.5.7. Organization Size

- 18.5.8. Application / Use Case

- 18.5.9. Industry Vertical

- 18.6. Saudi Arabia Deepfake Detection Technology Market

- 18.6.1. Country Segmental Analysis

- 18.6.2. Component

- 18.6.3. Deployment Mode

- 18.6.4. Technology/ Modality

- 18.6.5. Detection Technique

- 18.6.6. Functionality/ Use Case

- 18.6.7. Organization Size

- 18.6.8. Application / Use Case

- 18.6.9. Industry Vertical

- 18.7. Israel Deepfake Detection Technology Market

- 18.7.1. Country Segmental Analysis

- 18.7.2. Component

- 18.7.3. Deployment Mode

- 18.7.4. Technology/ Modality

- 18.7.5. Detection Technique

- 18.7.6. Functionality/ Use Case

- 18.7.7. Organization Size

- 18.7.8. Application / Use Case

- 18.7.9. Industry Vertical

- 18.8. Rest of Middle East Deepfake Detection Technology Market

- 18.8.1. Country Segmental Analysis

- 18.8.2. Component

- 18.8.3. Deployment Mode

- 18.8.4. Technology/ Modality

- 18.8.5. Detection Technique

- 18.8.6. Functionality/ Use Case

- 18.8.7. Organization Size

- 18.8.8. Application / Use Case

- 18.8.9. Industry Vertical

- 19. Africa Deepfake Detection Technology Market Analysis

- 19.1. Key Segment Analysis

- 19.2. Regional Snapshot

- 19.3. Africa Deepfake Detection Technology Market Size (Value - US$ Bn), Analysis, and Forecasts, 2021-2035

- 19.3.1. Component

- 19.3.2. Deployment Mode

- 19.3.3. Technology/ Modality

- 19.3.4. Detection Technique

- 19.3.5. Functionality/ Use Case

- 19.3.6. Organization Size

- 19.3.7. Application / Use Case

- 19.3.8. Industry Vertical

- 19.3.9. Country

- 19.3.9.1. South Africa

- 19.3.9.2. Egypt

- 19.3.9.3. Nigeria

- 19.3.9.4. Algeria

- 19.3.9.5. Rest of Africa

- 19.4. South Africa Deepfake Detection Technology Market

- 19.4.1. Country Segmental Analysis

- 19.4.2. Component

- 19.4.3. Deployment Mode

- 19.4.4. Technology/ Modality

- 19.4.5. Detection Technique

- 19.4.6. Functionality/ Use Case

- 19.4.7. Organization Size

- 19.4.8. Application / Use Case

- 19.4.9. Industry Vertical

- 19.5. Egypt Deepfake Detection Technology Market

- 19.5.1. Country Segmental Analysis

- 19.5.2. Component

- 19.5.3. Deployment Mode

- 19.5.4. Technology/ Modality

- 19.5.5. Detection Technique

- 19.5.6. Functionality/ Use Case

- 19.5.7. Organization Size

- 19.5.8. Application / Use Case

- 19.5.9. Industry Vertical

- 19.6. Nigeria Deepfake Detection Technology Market

- 19.6.1. Country Segmental Analysis

- 19.6.2. Component

- 19.6.3. Deployment Mode

- 19.6.4. Technology/ Modality

- 19.6.5. Detection Technique

- 19.6.6. Functionality/ Use Case

- 19.6.7. Organization Size

- 19.6.8. Application / Use Case

- 19.6.9. Industry Vertical

- 19.7. Algeria Deepfake Detection Technology Market

- 19.7.1. Country Segmental Analysis

- 19.7.2. Component

- 19.7.3. Deployment Mode

- 19.7.4. Technology/ Modality

- 19.7.5. Detection Technique

- 19.7.6. Functionality/ Use Case

- 19.7.7. Organization Size

- 19.7.8. Application / Use Case

- 19.7.9. Industry Vertical

- 19.8. Rest of Africa Deepfake Detection Technology Market

- 19.8.1. Country Segmental Analysis

- 19.8.2. Component

- 19.8.3. Deployment Mode

- 19.8.4. Technology/ Modality

- 19.8.5. Detection Technique

- 19.8.6. Functionality/ Use Case

- 19.8.7. Organization Size

- 19.8.8. Application / Use Case

- 19.8.9. Industry Vertical

- 20. South America Deepfake Detection Technology Market Analysis

- 20.1. Key Segment Analysis

- 20.2. Regional Snapshot

- 20.3. South America Deepfake Detection Technology Market Size (Value - US$ Bn), Analysis, and Forecasts, 2021-2035

- 20.3.1. Component

- 20.3.2. Deployment Mode

- 20.3.3. Technology/ Modality

- 20.3.4. Detection Technique

- 20.3.5. Functionality/ Use Case

- 20.3.6. Organization Size

- 20.3.7. Application / Use Case

- 20.3.8. Industry Vertical

- 20.3.9. Country

- 20.3.9.1. Brazil

- 20.3.9.2. Argentina

- 20.3.9.3. Rest of South America

- 20.4. Brazil Deepfake Detection Technology Market

- 20.4.1. Country Segmental Analysis

- 20.4.2. Component

- 20.4.3. Deployment Mode

- 20.4.4. Technology/ Modality

- 20.4.5. Detection Technique

- 20.4.6. Functionality/ Use Case

- 20.4.7. Organization Size

- 20.4.8. Application / Use Case

- 20.4.9. Industry Vertical

- 20.5. Argentina Deepfake Detection Technology Market

- 20.5.1. Country Segmental Analysis

- 20.5.2. Component

- 20.5.3. Deployment Mode

- 20.5.4. Technology/ Modality

- 20.5.5. Detection Technique

- 20.5.6. Functionality/ Use Case

- 20.5.7. Organization Size

- 20.5.8. Application / Use Case

- 20.5.9. Industry Vertical

- 20.6. Rest of South America Deepfake Detection Technology Market

- 20.6.1. Country Segmental Analysis

- 20.6.2. Component

- 20.6.3. Deployment Mode

- 20.6.4. Technology/ Modality

- 20.6.5. Detection Technique

- 20.6.6. Functionality/ Use Case

- 20.6.7. Organization Size

- 20.6.8. Application / Use Case

- 20.6.9. Industry Vertical

- 21. Key Players/ Company Profile

- 21.1. Adobe

- 21.1.1. Company Details/ Overview

- 21.1.2. Company Financials

- 21.1.3. Key Customers and Competitors

- 21.1.4. Business/ Industry Portfolio

- 21.1.5. Product Portfolio/ Specification Details

- 21.1.6. Pricing Data

- 21.1.7. Strategic Overview

- 21.1.8. Recent Developments

- 21.2. Amber Video

- 21.3. Clarifai

- 21.4. Deepware Scanner

- 21.5. Giant Oak

- 21.6. Google

- 21.7. HIVE

- 21.8. InVID / WeVerify

- 21.9. Meta

- 21.10. Microsoft

- 21.11. Pindrop

- 21.12. Reality Defender

- 21.13. Respeecher

- 21.14. Sensity (formerly Deeptrace)

- 21.15. Serelay

- 21.16. SRI International

- 21.17. Starling Labs

- 21.18. Truepic

- 21.19. Two Hat

- 21.20. ZeroFOX

- 21.21. Other Key Players

- Company websites, annual reports, financial reports, broker reports, and investor presentations

- National government documents, statistical databases and reports

- News articles, press releases and web-casts specific to the companies operating in the market, Magazines, reports, and others

- We gather information from commercial data sources for deriving company specific data such as segmental revenue, share for geography, product revenue, and others

- Internal and external proprietary databases (industry-specific), relevant patent, and regulatory databases

- Governing Bodies, Government Organizations

- Relevant Authorities, Country-specific Associations for Industries

- Historical Trends – Past market patterns, cycles, and major events that shaped how markets behave over time. Understanding past trends helps predict future behavior.

- Industry Factors – Specific characteristics of the industry like structure, regulations, and innovation cycles that affect market dynamics.

- Macroeconomic Factors – Economic conditions like GDP growth, inflation, and employment rates that affect how much money people have to spend.

- Demographic Factors – Population characteristics like age, income, and location that determine who can buy your product.

- Technology Factors – How quickly people adopt new technology and how much technology infrastructure exists.

- Regulatory Factors – Government rules, laws, and policies that can help or restrict market growth.

- Competitive Factors – Analyzing competition structure such as degree of competition and bargaining power of buyers and suppliers.

- Identify and quantify factors that drive market changes

- Statistical modeling to establish relationships between market drivers and outcomes

- Understand regular cyclical patterns in market demand

- Advanced statistical techniques to separate trend, seasonal, and irregular components

- Identify underlying market growth patterns and momentum

- Statistical analysis of historical data to project future trends

- Gather deep industry insights and contextual understanding

- In-depth interviews with key industry stakeholders

- Prepare for uncertainty by modeling different possible futures

- Creating optimistic, pessimistic, and most likely scenarios

- Sophisticated forecasting for complex time series data

- Auto-regressive integrated moving average models with seasonal components

- Apply economic theory to market forecasting

- Sophisticated economic models that account for market interactions

- Harness collective wisdom of industry experts

- Structured, multi-round expert consultation process

- Quantify uncertainty and probability distributions

- Thousands of simulations with varying input parameters

- Data Source Triangulation – Using multiple data sources to examine the same phenomenon

- Methodological Triangulation – Using multiple research methods to study the same research question

- Investigator Triangulation – Using multiple researchers or analysts to examine the same data

- Theoretical Triangulation – Using multiple theoretical perspectives to interpret the same data

Deepfake Detection Technology Market by Component, Deployment Mode, Technology/ Modality, Detection Technique, Functionality/ Use Case, Organization Size, Application / Use Case, Industry Vertical and Geography

Deepfake Detection Technology Market Size, Share & Trends Analysis Report by Component (Solutions, Services and Platforms & APIs), Deployment Mode, Technology/ Modality, Detection Technique, Functionality/ Use Case, Organization Size, Application / Use Case, Industry Vertical and Geography (North America, Europe, Asia Pacific, Middle East, Africa, and South America) – Global Industry Data, Trends, and Forecasts, 2026–2035

- Market Structure & Evolution

- Segmental Data Insights

- Demand Trends

- Competitive Landscape

- Strategic Development

- Future Outlook & Opportunities

Deepfake Detection Technology Market Size, Share, and Growth

The global deepfake detection technology market is growing rapidly, from an estimated USD 0.6 billion in 2025 to a projected USD 15.1 billion by 2035, a CAGR of 37.2% over the forecast period. The market is expanding worldwide, driven by factors that are changing how safety and trust are maintained in the digital world.

“Deepfakes are no longer just a tech problem - they’re a crisis of trust,” warns Jill Popelka, CEO of Darktrace. She revealed that she once received a voicemail during a board meeting that sounded exactly like her, even though she was present - a chilling reminder of how convincingly AI can clone a voice. Her team later replicated her voice using publicly available tools, underscoring how easy it is for deepfakes to exploit human vulnerability. As she put it, “These deepfakes… are very hard to protect from,” calling into focus the urgent need for robust detection and authentication systems in an age of synthetic media.

The rapid and increasingly sophisticated creation of AI-generated synthetic media has pushed companies, governments, and platforms to invest heavily in in-depth detection tools. For instance, in February 2024, Microsoft broadened its Video Authenticator technology to include real-time detection of manipulated audio-visual content, supporting the identification of frame-level and waveform anomalies in deepfakes. Similarly, in June 2025, Google rolled out updated deepfake detection models under its SynthID framework, enabling watermarking and integrity checks for images, video, and audio on its cloud platforms.

The abuse of AI technologies for misinformation, manipulation, fraud, and identity spoofing, among other purposes, has escalated sharply, substantially increasing the demand for detection systems.

Moreover, the introduction of the EU AI Act and U.S. discussions on platform accountability are compelling companies to deploy certified detection tools to maintain content authenticity. Together, rising manipulation risks, regulatory pressure, and growing enterprise awareness are driving growth in deepfake detection technologies and building trust in digital ecosystems.

There are also opportunities in adjacent forensic AI tools, including content authentication frameworks, secure media provenance systems, voice integrity verification solutions, and model watermarking technologies. By tapping into these adjacent sectors, vendors can build integrated integrity-tech ecosystems that are resilient to the continuous evolution of synthetic media threats.

Deepfake Detection Technology Market Dynamics and Trends

Driver: Rising Regulatory Pressure Accelerating Adoption of Deepfake Detection Technologies

Restraint: High Model Complexity and Evolving Manipulation Techniques Slowing Widespread Adoption

Opportunity: Expansion in Enterprise Security, Media Authentication, and Fraud Prevention

Key Trend: AI-Driven Forensics, Watermarking, and Real-Time Media Provenance

Deepfake Detection Technology Market Analysis and Segmental Data

“Media & Publishing Dominates Global Deepfake Detection Technology Market amid Escalating Misinformation Threats”

“North America Leads Deepfake Detection Technology Market with Advanced AI Forensics Adoption and Strong Regulatory Action”

Deepfake Detection Technology Market Ecosystem

The deepfake detection technology market is becoming increasingly consolidated, with a few major players such as Microsoft, Google, Meta, Truepic, and Sensity dominating through advanced AI forensics, multimodal analysis, and automated content-authenticity tools. Vendors focus on different areas of technology, from Google's SynthID watermarking system and Truepic's provenance-based authentication to Pindrop's voice-clone detection and Reality Defender's real-time deepfake scanning, which keeps the whole ecosystem innovating continuously.

Government agencies and academia are also active in this race. For example, in January 2024, the U.S. Department of Defense's DARPA broadened its Semantic Forensics (SemaFor) program to deepen learning-based detection of falsified video and audio, with the aim of significantly strengthening national defense capabilities against synthetic media threats.

The most influential players are turning their portfolios into fully integrated solutions that combine provenance metadata, anomaly detection, and automated verification pipelines to improve workflow efficiency for enterprises, media organizations, and public agencies. In June 2025, Adobe went a step further in its Content Authenticity Initiative by embedding AI-driven tamper detection in its creative suite, which resulted in a measurable improvement in the accuracy of image manipulation identification.

These innovations, along with increasing regulatory pressure and cross-industry collaboration, keep deepfake detection at the core of global digital trust infrastructure.

Recent Development and Strategic Overview:

Report Scope

| Attribute | Detail |
|---|---|
| Market Size in 2025 | USD 0.6 Bn |
| Market Forecast Value in 2035 | USD 15.1 Bn |
| Growth Rate (CAGR) | 37.2% |
| Forecast Period | 2026 – 2035 |
| Historical Data Available for | 2021 – 2024 |
| Market Size Units | USD Bn for Value |
| Report Format | Electronic (PDF) + Excel |
| Regions and Countries Covered | North America, Europe, Asia Pacific, Middle East, Africa, South America |
| Companies Covered | |
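The size, forecast, and growth-rate figures in the report scope are linked by the standard compound annual growth rate formula. The following is a minimal sketch of that arithmetic, not the report's own model: the function names are illustrative, and the choice of 10 compounding periods (2025 to 2035) is an assumption, since the report does not state the base year it compounds from. Because the published endpoints are rounded, the implied rate need not match the stated 37.2% exactly.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.25 means 25%)."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Rounded figures from the report scope (USD Bn); 10 periods is an assumption.
implied = cagr(0.6, 15.1, years=10)
projected = project(0.6, 0.372, years=10)

print(f"Implied CAGR from rounded endpoints: {implied:.1%}")
print(f"USD 0.6 Bn compounded at 37.2% for 10 years: USD {projected:.1f} Bn")
```

Running both directions is a quick consistency check: any gap between the implied and stated rates indicates how much rounding or a different base year affects the headline numbers.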

Deepfake Detection Technology Market Segmentation and Highlights

| Segment | Sub-segment |
|---|---|
| Deepfake Detection Technology Market, By Component | |
| Deepfake Detection Technology Market, By Deployment Mode | |
| Deepfake Detection Technology Market, By Technology/ Modality | |
| Deepfake Detection Technology Market, By Detection Technique | |
| Deepfake Detection Technology Market, By Functionality/ Use Case | |
| Deepfake Detection Technology Market, By Organization Size | |
| Deepfake Detection Technology Market, By Application / Use Case | |
| Deepfake Detection Technology Market, By Industry Vertical | |

Note* - This is only a tentative list of players. The final report will cover additional players based on their revenue and share in each geography.

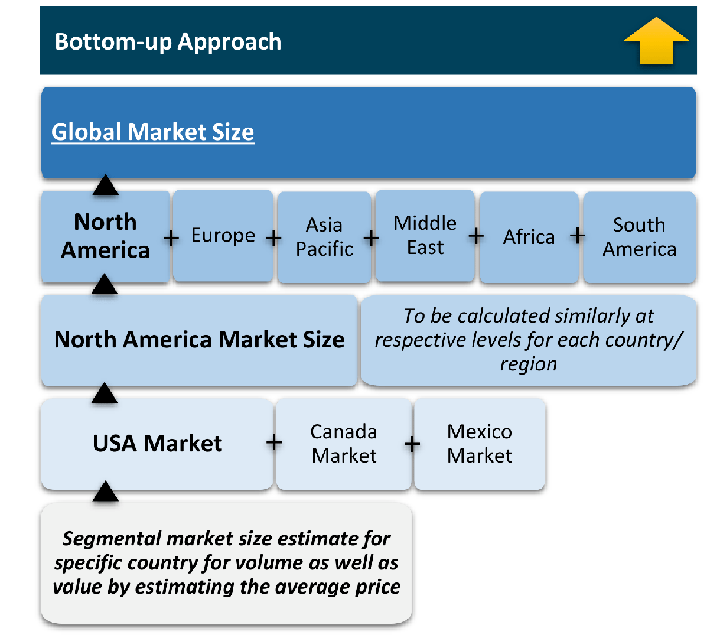

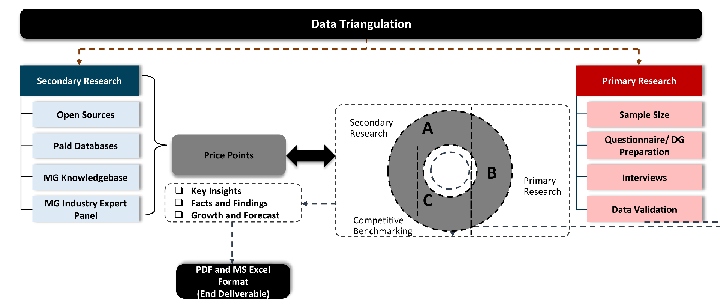

Research Design

Our research design integrates both demand-side and supply-side analysis through a balanced combination of primary and secondary research methodologies. By utilizing both bottom-up and top-down approaches alongside rigorous data triangulation methods, we deliver robust market intelligence that supports strategic decision-making.

MarketGenics' comprehensive research design framework ensures the delivery of accurate, reliable, and actionable market intelligence. Through the integration of multiple research approaches, rigorous validation processes, and expert analysis, we provide our clients with the insights needed to make informed strategic decisions and capitalize on market opportunities.

MarketGenics leverages a dedicated industry panel of experts and a comprehensive suite of paid databases to effectively collect, consolidate, and analyze market intelligence.

Our approach has consistently proven to be reliable and effective in generating accurate market insights, identifying key industry trends, and uncovering emerging business opportunities.

Through both primary and secondary research, we capture and analyze critical company-level data such as manufacturing footprints, including technical centers, R&D facilities, sales offices, and headquarters.

Our expert panel further enhances our ability to estimate market size for specific brands based on validated field-level intelligence.

Our data mining techniques incorporate both parametric and non-parametric methods, allowing for structured data collection, sorting, processing, and cleaning.

Demand projections are derived from large-scale data sets analyzed through proprietary algorithms, culminating in robust and reliable market sizing.

Research Approach

The bottom-up approach builds market estimates by starting with the smallest addressable market units and systematically aggregating them to create comprehensive market size projections.

This method begins with specific, granular data points and builds upward to create the complete market landscape.

Customer Analysis → Segmental Analysis → Geographical Analysis
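The customer → segment → geography roll-up described above can be sketched as a simple aggregation. This is an illustrative sketch only: the records, segment names, and spend figures are hypothetical placeholders, and `bottom_up` is not a function from the report.

```python
from collections import defaultdict

# Hypothetical customer-level inputs:
# (region, segment, number of customers, annual spend per customer in USD)
records = [
    ("North America", "Solutions", 120, 50_000.0),
    ("North America", "Services", 80, 20_000.0),
    ("Europe", "Solutions", 90, 40_000.0),
    ("Europe", "Services", 60, 15_000.0),
]


def bottom_up(rows):
    """Aggregate granular customer data into segment, region, and total estimates."""
    by_segment = defaultdict(float)
    by_region = defaultdict(float)
    for region, segment, customers, spend_per_customer in rows:
        revenue = customers * spend_per_customer  # smallest addressable unit
        by_segment[segment] += revenue            # segmental analysis
        by_region[region] += revenue              # geographical analysis
    total = sum(by_region.values())               # complete market estimate
    return dict(by_segment), dict(by_region), total


by_segment, by_region, total = bottom_up(records)
print(by_segment)
print(by_region)
print(f"Total market estimate: USD {total:,.0f}")
```

Keeping the segment and region totals alongside the grand total makes it easy to confirm that each view sums to the same figure.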

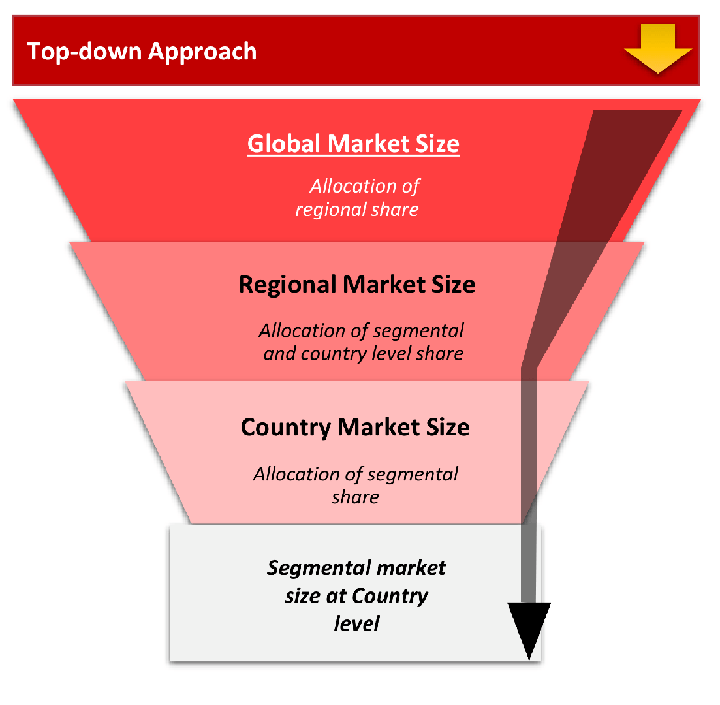

The top-down approach starts with the broadest possible market data and systematically narrows it down through a series of filters and assumptions to arrive at specific market segments or opportunities.

This method begins with the big picture and works downward to increasingly specific market slices.

TAM → SAM → SOM
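The TAM → SAM → SOM funnel can be sketched as successive share filters, where TAM is the total addressable market, SAM the serviceable addressable market, and SOM the serviceable obtainable market. The function name and the share values below are hypothetical and chosen only to show the mechanics; the report does not publish its filter assumptions.

```python
def top_down(tam: float, serviceable_share: float, obtainable_share: float) -> dict:
    """Narrow a broad market figure through two successive share filters."""
    for share in (serviceable_share, obtainable_share):
        if not 0.0 <= share <= 1.0:
            raise ValueError("shares must be between 0 and 1")
    sam = tam * serviceable_share   # portion the offering can actually serve
    som = sam * obtainable_share    # portion realistically capturable
    return {"TAM": tam, "SAM": sam, "SOM": som}


# Hypothetical inputs: a 1,000-unit market, 40% serviceable, 10% of that obtainable.
funnel = top_down(tam=1000.0, serviceable_share=0.4, obtainable_share=0.1)
for stage, value in funnel.items():
    print(f"{stage}: {value:,.1f}")
```

Each stage is bounded by the one before it, so a SOM larger than the SAM signals an inconsistent assumption.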

Research Methods

Desk / Secondary Research

While analysing the market, we extensively study secondary sources, directories, and databases to identify and collect information useful for this technical, market-oriented, and commercial report. The secondary sources we use are not limited to public sources; they combine open sources, associations, paid databases, the MG Repository & Knowledgebase, and others.

We also employ the model mapping approach to estimate product-level market data from the players' product portfolios.

Primary Research

Primary research, conducted through interviews, is vital in analyzing the market. Most cases involve paid primary interviews. Primary sources include e-mail interactions, telephone interviews, surveys, and face-to-face interviews with stakeholders across the value chain, including several industry experts.

| Type of Respondents | Number of Primaries |
|---|---|
| Tier 2/3 Suppliers | ~20 |
| Tier 1 Suppliers | ~25 |
| End-users | ~25 |
| Industry Expert/ Panel/ Consultant | ~30 |
| Total | ~100 |

MG Knowledgebase

- Repository of industry blogs, newsletters, and case studies

- Online platform covering detailed market reports and company profiles

Forecasting Factors and Models

Forecasting Factors

Forecasting Models / Techniques

Multiple Regression Analysis

Time Series Analysis – Seasonal Patterns

Time Series Analysis – Trend Analysis

Expert Opinion – Expert Interviews

Multi-Scenario Development

Time Series Analysis – Moving Averages

Econometric Models

Expert Opinion – Delphi Method

Monte Carlo Simulation
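As an illustration of the Monte Carlo Simulation technique listed above, the sketch below runs many simulated growth paths with randomly varying yearly growth and reads off low, middle, and high outcomes. It is a generic example under stated assumptions, not the report's model: the normal distribution, the 8-percentage-point spread, the run count, and all function names are hypothetical. Only the USD 0.6 Bn base and 37.2% mean growth come from the report.

```python
import random


def simulate_market(base: float, years: int, mean_growth: float,
                    growth_sd: float, runs: int = 5000, seed: int = 42) -> list:
    """Return sorted end-of-horizon market sizes from `runs` simulated paths."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    outcomes = []
    for _run in range(runs):
        value = base
        for _year in range(years):
            # Draw this year's growth; floor at -100% so value stays non-negative.
            growth = max(rng.gauss(mean_growth, growth_sd), -1.0)
            value *= 1 + growth
        outcomes.append(value)
    return sorted(outcomes)


def percentile(sorted_values: list, q: float) -> float:
    """Nearest-rank percentile of an already sorted list, with 0 <= q <= 1."""
    index = round(q * (len(sorted_values) - 1))
    return sorted_values[index]


outcomes = simulate_market(base=0.6, years=10, mean_growth=0.372, growth_sd=0.08)
low, mid, high = (percentile(outcomes, q) for q in (0.10, 0.50, 0.90))
print(f"10th percentile: USD {low:.1f} Bn")
print(f"Median:          USD {mid:.1f} Bn")
print(f"90th percentile: USD {high:.1f} Bn")
```

The three percentiles can be read as pessimistic, most likely, and optimistic cases, which is one way to connect a simulation to multi-scenario development.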

Research Analysis

Our research framework is built upon the fundamental principle of validating market intelligence from both demand and supply perspectives. This dual-sided approach ensures comprehensive market understanding and reduces the risk of single-source bias.

Demand-Side Analysis: We assess end-user and application behavior, preferences, and market needs, along with the penetration of the product in specific applications.

Supply-Side Analysis: We estimate overall market revenue, analyze the segmental share along with industry capacity, competitive landscape, and market structure.

Validation & Evaluation

Data triangulation is a validation technique that uses multiple methods, sources, or perspectives to examine the same research question, thereby increasing the credibility and reliability of research findings. In market research, triangulation serves as a quality assurance mechanism that helps identify and minimize bias, validate assumptions, and ensure accuracy in market estimates.
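One simple way to picture triangulation is to compare independent estimates of the same quantity and flag any that diverge from the rest. The sketch below is an assumption-laden illustration: the source names, the estimate values, the use of the median as the consensus, and the 15% tolerance are all hypothetical, and the report does not describe its actual reconciliation rule.

```python
import statistics


def triangulate(estimates: dict, tolerance: float = 0.15) -> dict:
    """Compare estimates of one quantity and flag those far from the median."""
    center = statistics.median(estimates.values())
    # Relative deviation of each source from the consensus value.
    deviations = {name: (value - center) / center for name, value in estimates.items()}
    outliers = [name for name, dev in deviations.items() if abs(dev) > tolerance]
    return {"consensus": center, "deviations": deviations, "outliers": outliers}


# Hypothetical market-size estimates (USD Bn) from four independent routes.
result = triangulate({
    "bottom_up": 0.58,
    "top_down": 0.63,
    "expert_panel": 0.60,
    "vendor_filings": 0.80,
})
print(f"Consensus estimate: {result['consensus']:.3f}")
print(f"Sources needing review: {result['outliers']}")
```

A flagged source is not necessarily wrong; it marks where assumptions should be re-examined before the estimates are reconciled.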
